Meta, the U.S. company that owns the social media sites Facebook and Instagram, claims it has taken “significant action” to remove “harmful stereotypes about Jewish people” and to eliminate content that distorts or denies the Holocaust.

“We’ve made progress in fighting antisemitism on our platforms,” Meta claimed recently while acknowledging that “our work is never done.”

This is an understatement, to say the least. Meta’s statement may be sincere, but its platforms not only host and promote antisemitic content, they also steer users toward anti-Jewish tropes and conspiracy theories, according to two new studies released last week by the Anti-Defamation League and the Tech Transparency Project.

The findings appear at a fraught moment. Antisemitic incidents in the United States reached an all-time high in 2022, a 36 percent increase from the previous year. Such is the racist climate today that 20 percent of Americans surveyed by the ADL believe in six or more antisemitic tropes, the highest level in three decades.

In one of the ADL studies, researchers created six online accounts for fictional people, four adults and two teenagers, on YouTube, Facebook, Instagram and X.

With the exception of YouTube, these platforms pushed hateful suggestions. The more users liked or followed pages, the more antisemitic content they were shown. In other words, these platforms fomented antisemitism.

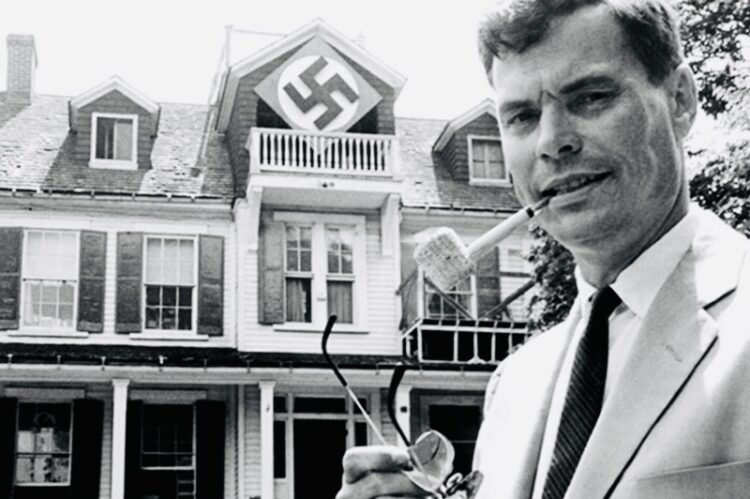

One account recommended on Instagram featured an interview with George Lincoln Rockwell, the late founder and leader of the American Nazi Party.

Part of the problem lies with algorithms, the automated systems that recommend content to users and keep them on the platform longer.

“Algorithmic antisemitism” is driven by “engagement metrics” such as likes, views, shares and comments, says Matthew Williams, a professor of criminology at Cardiff University and the author of The Science of Hate.

Williams’ explanation flies in the face of assurances from Facebook, Instagram and X that their algorithms produce high-quality content and that they ban hate speech.

Social media platforms should be doing more to curb the spread of online extremism and antisemitism, says Yael Eisenstat, the vice-president of the Anti-Defamation League and the head of its Center for Technology and Society.

In effect, major social media companies have failed to enforce their own rules on racist content. They might look to YouTube as a model for combating racial hatred.

YouTube, which has had its own problems with hateful content, has adopted a forward-looking policy from which its competitors can learn.

In a statement, YouTube said, “Over the years, we’ve made significant investments in the systems and processes that enable YouTube to be a more reliable source of high quality information, and we continue to invest in the people, policies and technology that enable us to successfully do this work.”

YouTube has blazed a path that Facebook, Instagram and X should and can follow, but it remains to be seen whether these companies will do what is manifestly right.